This year marks the 25th anniversary of my passing out from Air Force Bal Bharati School (AFBBS). I was there for two years, and they were some of the most fun and memorable times of my life. During my time there I made some lifelong friends and learnt a lot about life and the good stuff. I was in the computer section, XII-C, or “The Class with a Class” as it was otherwise known.

XII-C The Class with a Class

A few months ago a group of people from one of the other sections in our batch started a thread about having a reunion and began working on it. Over the next few weeks I noticed that it was mostly folks from the other sections who were interested and actively planning for the reunion; our class was mostly silent on the topic. Since I was not much in touch with anyone from the other sections, or rather was never friends with anyone outside of the C section (my class), I was of two minds about attending the reunion. Then we found out that the event was not happening at the school and was instead at a place somewhere in the Qutub Minar area.

Then someone asked in our class group if anyone else was going. Interestingly, most of the folks said that they would rather meet separately with just our class instead of the whole batch. After seeing that, Tarini and I got talking about a separate reunion for our class. Tarini was nice enough to volunteer her place for the event, and thus we decided to have our get-together on the same day as the other one.

The event was planned for the 16th, and Jani and I flew in early that morning to reach Delhi by 2:30pm. Since it would have taken us too long to go to Noida and come back (we would have spent the entire time traveling instead of spending time at home), we decided to show up early at Tarini’s place and start the party early. That way we got to spend time with the kids and Aunty as well before the rest of the folks arrived.

The evening was quite memorable, with a lot of old stories being told to spouses about folks who I hope didn’t get into too much trouble once their wives found out what they had been up to in school. Crushes were explained in detail, famous pranks were recounted, and so on. It was much more fun and intimate than attending a party with loud music, though that also would have been fun if we knew more people there.

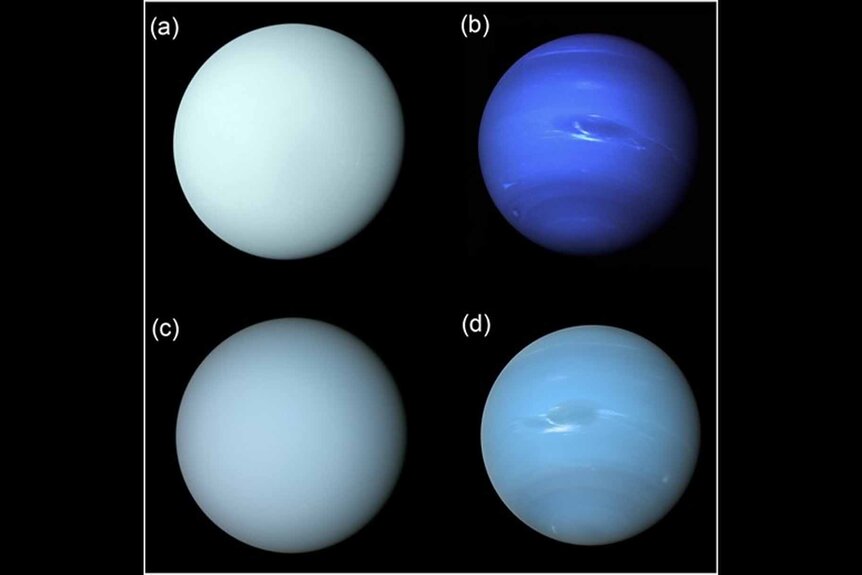

It’s hard to believe that it’s already been 25 years since we were all in school together and we have gone from:

to looking like this 25 years later:

I wish I could say that we all kept in touch throughout the last 25 years, but sadly that wasn’t the case. I was meeting Ravi after 25 years, and Ankush and Rahul after ~16 years or so, and it was a lot of fun to catch up after such a long time. To be honest, it didn’t feel like so much time had passed since we last met. I had been meeting the rest of the folks more frequently, some more frequently than others. It would have been great if the rest of the 40-odd classmates could have also made it to the reunion, but that’s ok. There is always a next time.

In spite of only getting back to Delhi the day before, Tarini went above and beyond with the food and drinks. I especially loved the mulled wine, and that reminds me that I need to get the recipe from her, or instead get her to make it for me every time I visit. Actually, the second option seems a lot more fun and less work for me, so I will do that.

The party went on till almost 4am, then Tarini, Jani and I chilled out for a while after everyone went home and just chatted. The next day was again hectic as we had a brunch and then headed back to the airport to return to Bangalore so that we could prep for our next trip to Malaysia, which we were leaving for the next day (18th). We got back to India on the 25th, and that is why this post was delayed: I was having too much fun there to write about the reunion.

Well, this is all for now. Will write more later.

– Suramya